Multi-processing in Node.js


Node.js is a great tool for creating JavaScript-based applications. Developers can build everything from mobile apps (NativeScript, Ionic, Aurelia) to web apps (Express, hapi) to desktop apps (Electron). It's tough to imagine that this seemingly all-purpose tool is single-threaded, but in most Node.js applications all of your JavaScript code runs on a single main thread. There is, however, a way to make Node.js multi-process: child processes.

Node.js Single Process

First, let's take a brief look at the single process that Node runs on and how it manages to stay fast and responsive. Node.js uses non-blocking (asynchronous) I/O and the Event Loop. Asynchronous I/O lets the single main thread start an I/O operation and carry on executing other code instead of waiting for that operation to finish. The example below shows the difference between synchronous and asynchronous code.

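```javascript
const fs = require('fs');

// Synchronous: blocks the main thread until the whole file has been read
const fileContents = fs.readFileSync('path/to/file', 'utf8');
console.log(fileContents);

// Asynchronous: returns immediately; the callback runs once the data is available
fs.readFile('path/to/file', 'utf8', (err, fileContents) => {
  if (err) {
    throw err;
  }
  console.log(fileContents);
});
```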

The two read functions above achieve the same result, except that `fs.readFile` does it asynchronously. While the file is being read, the single thread is free to run other code. When `fs.readFile` is called, Node starts the read in the background and the function returns immediately. Once the data is available, the callback passed as the last argument is queued on the Event Loop and then called with the file's contents.

For a detailed look at the Node.js Event Loop, check out the excellent blog post by RisingStack.

Node.js achieves this fast asynchronous I/O using libuv, a C library that provides the Event Loop and cross-platform asynchronous I/O. libuv is also used in many other projects.

Child Process

`child_process` is a built-in Node.js module that can be used to run CLI programs as child processes. As you can imagine, this is really powerful when it comes to executing other tools from within a Node script. For example, you could run a Unix tool like `ls` right from JavaScript. Python scripts are also run from the CLI, so if one of your tasks can only be done in Python, you can still integrate that code into your Node script. The same is true for any CLI-based program, including Node itself. So let's look at some code:

```javascript
const execFile = require('child_process').execFile;

// Callback based: the callback runs once the child process has exited
execFile('node', ['--version'], (error, stdout, stderr) => {
  if (error) {
    throw error;
  }
  console.log(stdout);
  console.error(stderr);
});

// OR

// Event based: the handlers fire as the child writes its output
const child = execFile('node', ['--version']);

child.stdout.on('data', (data) => {
  console.log(data);
});

child.stderr.on('data', (data) => {
  console.error(data);
});

child.on('error', (error) => {
  throw error;
});
```

Both snippets above achieve the same result with different approaches. With the callback-based style, the callback is called once the child process ends, and the child's output is buffered in memory until then. With the event-based style, the data is streamed to your handlers as the child process writes it. Both examples run `node --version` and print its output.

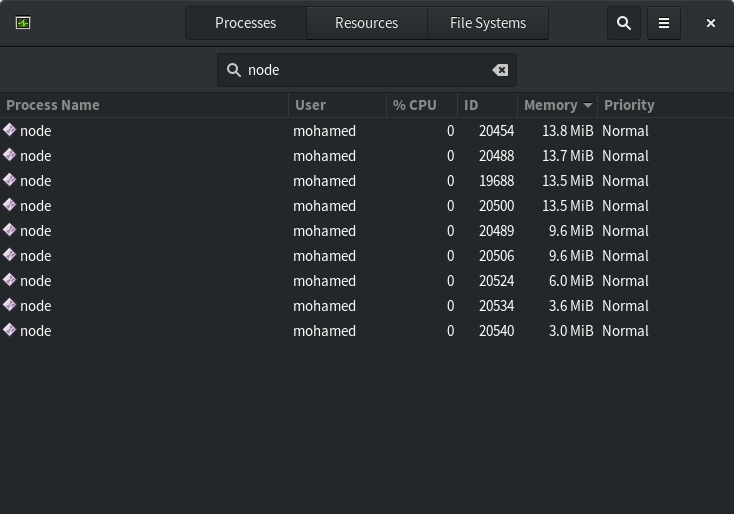
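If you prefer promises and `async`/`await`, `execFile` can be wrapped with `util.promisify`. The resulting function resolves to an object with `stdout` and `stderr` and rejects if the command fails. This is a minimal sketch: `getNodeVersion` is an illustrative name, and it assumes you want to run the same Node binary that is running your script (`process.execPath`) rather than whichever `node` comes first on your `PATH`.

```javascript
const util = require('util');
const { execFile } = require('child_process');

// Promise-returning version of execFile; resolves to { stdout, stderr }
const execFileAsync = util.promisify(execFile);

// Illustrative helper: ask a child Node process for its version string
async function getNodeVersion() {
  // process.execPath is the absolute path of the running Node binary
  const { stdout } = await execFileAsync(process.execPath, ['--version']);
  return stdout.trim(); // a string such as "v20.11.0", depending on your install
}

getNodeVersion()
  .then((version) => console.log(`Child reported ${version}`))
  .catch((error) => console.error('Child process failed:', error));
```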

Each command given to a child process runs in its own separate operating-system process. (`execFile` starts the program directly; `exec`, by contrast, runs the command inside a new shell.) Whatever number crunching is needed happens in that process, leaving the main process free to do its thing. This kind of multi-processing can be really powerful for many use cases. Below I give an example of one.
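Before getting to that use case, here is a small experiment that shows the parent really does stay free. It is an illustration I'm adding, not part of the original code. `runBusyChild` is a hypothetical helper that starts a child Node process (via `process.execPath`) that spins in a busy loop for a given number of milliseconds, while the parent's `setInterval` timer keeps firing. If the same loop ran on the main thread, the timer could not fire until the loop had finished.

```javascript
const { execFile } = require('child_process');

// Hypothetical helper: keep a child Node process busy for `ms` milliseconds
// while the parent counts how often its own timer fires in the meantime.
function runBusyChild(ms) {
  return new Promise((resolve, reject) => {
    let ticks = 0;
    const timer = setInterval(() => {
      ticks += 1;
    }, 50);

    // Busy-wait loop that blocks the child's thread, not the parent's
    const script = `const end = Date.now() + ${ms}; while (Date.now() < end) {}`;

    execFile(process.execPath, ['-e', script], (error) => {
      clearInterval(timer);
      if (error) {
        reject(error);
        return;
      }
      resolve(ticks);
    });
  });
}

runBusyChild(500)
  .then((ticks) => console.log(`Parent timer fired ${ticks} times while the child was busy`))
  .catch((error) => console.error(error));
```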

*[CLI]: Command Line Interface

Use Case

One use case for Node child processes is searching a very large CSV for records using IDs from another CSV. A large CSV (in my experience, I've had to work with 20GB files) can be a pain to process and extract records from, especially if you have to search for those records one by one. Node.js provides a really nice set of modules (`fs`, `readline`, `os` and `child_process`) that can be combined into a multi-process search over a large CSV. Let's look at an example.

Let's pretend that we have the following data set:

```
id;point1;point2;point3;...(200 more attributes)
1;a;b;c;...(200 more values)
2;a;b;c;...(200 more values)
3;a;b;c;...(200 more values)
4;a;b;c;...(200 more values)
.
.
.
(5 million more records)
```

Data like this is not uncommon in the real world. Often you have to process CSVs with many millions of records and hundreds of attributes, and these files can be many gigabytes in size.

Now let's say that we are given the following file as a reference for which records to extract.

```
id;point1
1;a
12;a
13;a
19;a
200;a
4253;a
23526;a
.
.
.
(1000 more records)
```

This reference file of IDs tells us which records we need to extract from the large data set. If we search for them one by one, the job will certainly get done, but it will take longer and you may not be able to meet your deadline. Let's look at how we can run some processes in parallel to make this go faster.

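Let's start with the script that each child process will run. It searches the large file for a single ID taken from the reference file. The parent script later in this post runs it as `childProcess.js`: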

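```javascript
// childProcess.js: prints every record in `haystack` whose ID equals `needle`
const readline = require('readline');
const fs = require('fs');

const haystack = process.argv[2]; // path to the large CSV
const needle = process.argv[3];   // ID to search for

// Both arguments are required
if (!haystack || !needle) {
  throw new Error('Usage: node childProcess.js <haystack> <needle>');
}

// Stream the file instead of loading it into memory
const stream = fs.createReadStream(haystack);

const rl = readline.createInterface({
  input: stream
});

rl.on('line', (line) => {
  const fields = line.split(';');

  // The ID is the first field of each record
  if (fields[0] === needle) {
    console.log(JSON.stringify(fields));
  }
});
```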

The script above finds and prints every record whose ID matches the needle passed in on the command line. We create a read stream because a file that large cannot be loaded into memory at once, and we use the readline module to process it line by line. The haystack (the path to the large file) and the needle (the ID to look for) are read from `process.argv`. Now let's look at the parent script that passes those arguments in.
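On recent Node.js versions, readline interfaces can also be consumed with `for await...of`, which some people find easier to follow than the `'line'` event. This is a sketch, not the script used in this post: `findRecords` is a hypothetical function that collects the matches into an array instead of printing them, and the CLI part only runs when both arguments are given.

```javascript
const fs = require('fs');
const readline = require('readline');

// Hypothetical helper: stream `haystack` line by line and collect every
// record whose first ';'-separated field equals `needle`.
async function findRecords(haystack, needle) {
  const rl = readline.createInterface({
    input: fs.createReadStream(haystack),
    crlfDelay: Infinity // treat \r\n as a single line break
  });

  const matches = [];
  for await (const line of rl) {
    const fields = line.split(';');
    if (fields[0] === needle) {
      matches.push(fields);
    }
  }
  return matches;
}

// Only act as a CLI when both arguments are present: node search.js <file> <id>
if (process.argv.length >= 4) {
  findRecords(process.argv[2], process.argv[3])
    .then((matches) => matches.forEach((m) => console.log(JSON.stringify(m))))
    .catch((error) => {
      console.error(error);
      process.exitCode = 1;
    });
}
```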

```javascript
const fs = require('fs');
const readline = require('readline');
const async = require('async'); // npm install async
const os = require('os');
const execFile = require('child_process').execFile;

// Unique IDs (needles) collected from the reference file
const needles = new Set();

// Run at most one child process per CPU core at a time
const NUMBER_OF_PROCESS = os.cpus().length;

const referenceStream = fs.createReadStream('path/to/referenceFile.csv');

const rl = readline.createInterface({
  input: referenceStream
});

let isHeader = true;

rl.on('line', (line) => {
  // Skip the header row ("id;point1")
  if (isHeader) {
    isHeader = false;
    return;
  }

  const needle = line.split(';')[0].trim();

  // Ignore blank lines; the Set takes care of duplicates
  if (needle) {
    needles.add(needle);
  }
});

rl.on('close', () => {
  async.eachLimit([...needles], NUMBER_OF_PROCESS, (item, callback) => {
    execFile('node',
      ['childProcess.js', 'path/to/largeFile.csv', item],
      (error, stdout) => {
        if (error) {
          return callback(error);
        }

        process.stdout.write(stdout);

        // Tell eachLimit this item is done so it can start the next process
        callback();
      });
  }, (err) => {
    if (err) {
      console.log(`Error ${err}`);
    } else {
      console.log('Done');
    }
  });
});
```

The above code first creates a stream of the reference file and, on each line, builds a list of unique IDs to use as our needles. Once that stream is closed, we use the excellent async library (installed from npm) to control the number of processes we create. The code allows as many concurrent processes as there are CPU cores on the machine. Using that number and the `eachLimit` function from the async library, the code starts the maximum number of processes and then starts a new one whenever one finishes. We use the `execFile` function, same as before, except that now we run our own script and pass it the arguments it needs. When you run the above file with Node, it creates multiple processes and prints everything the child processes output to the terminal.
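If you would rather not depend on the async library, the same "at most N at a time" behaviour can be sketched with plain promises. This is an alternative I'm suggesting, not the code above: `runWithLimit` is a hypothetical helper that starts up to `limit` runners, each of which keeps claiming the next unprocessed item until none are left.

```javascript
// Hypothetical helper: run `worker(item)` for every item, with at most
// `limit` calls in flight at once. Resolves to the results in input order.
async function runWithLimit(items, limit, worker) {
  const results = new Array(items.length);
  let next = 0;

  // Each runner repeatedly claims the next unprocessed index.
  // Claiming is safe without locks because JavaScript runs it on one thread.
  async function runner() {
    while (next < items.length) {
      const index = next++;
      results[index] = await worker(items[index]);
    }
  }

  const runners = [];
  for (let i = 0; i < Math.min(limit, items.length); i++) {
    runners.push(runner());
  }
  await Promise.all(runners);
  return results;
}
```

With it, the body of the `'close'` handler could become something like `runWithLimit([...needles], NUMBER_OF_PROCESS, (id) => execFileAsync('node', ['childProcess.js', 'path/to/largeFile.csv', id]))`, where `execFileAsync` is `util.promisify(execFile)`. Unlike `eachLimit` in the code above, `Promise.all` rejects on the first failure but does not stop the runners that are already in progress.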

There are many more possibilities with child processes (the module also offers `spawn`, `exec` and `fork`, for example). Hope this was useful :-)

*[CSV]: Comma-Separated Values