Scalable System Architecture with Docker, Docker Flow, and Elastic Stack: Frontend Services

Continuing the docker infrastructure series, we now create our frontend services.

Scalable System Architecture

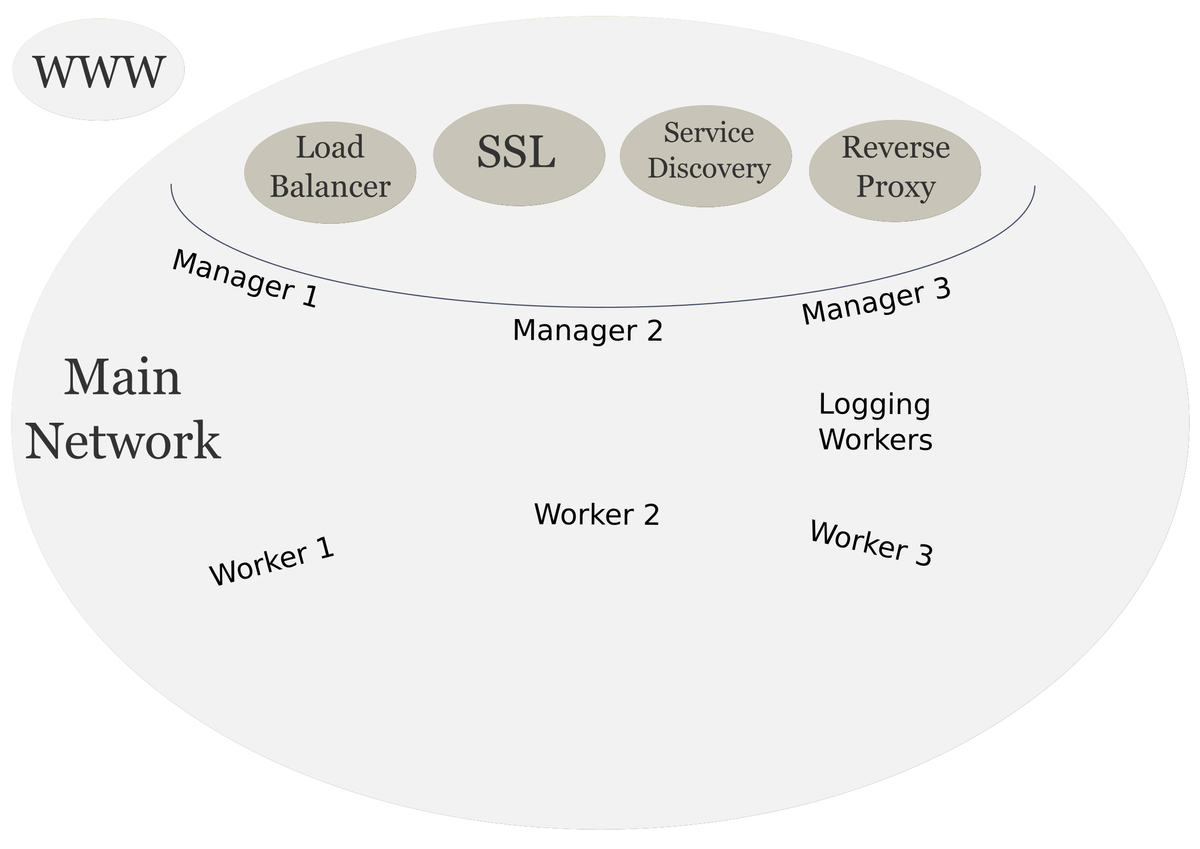

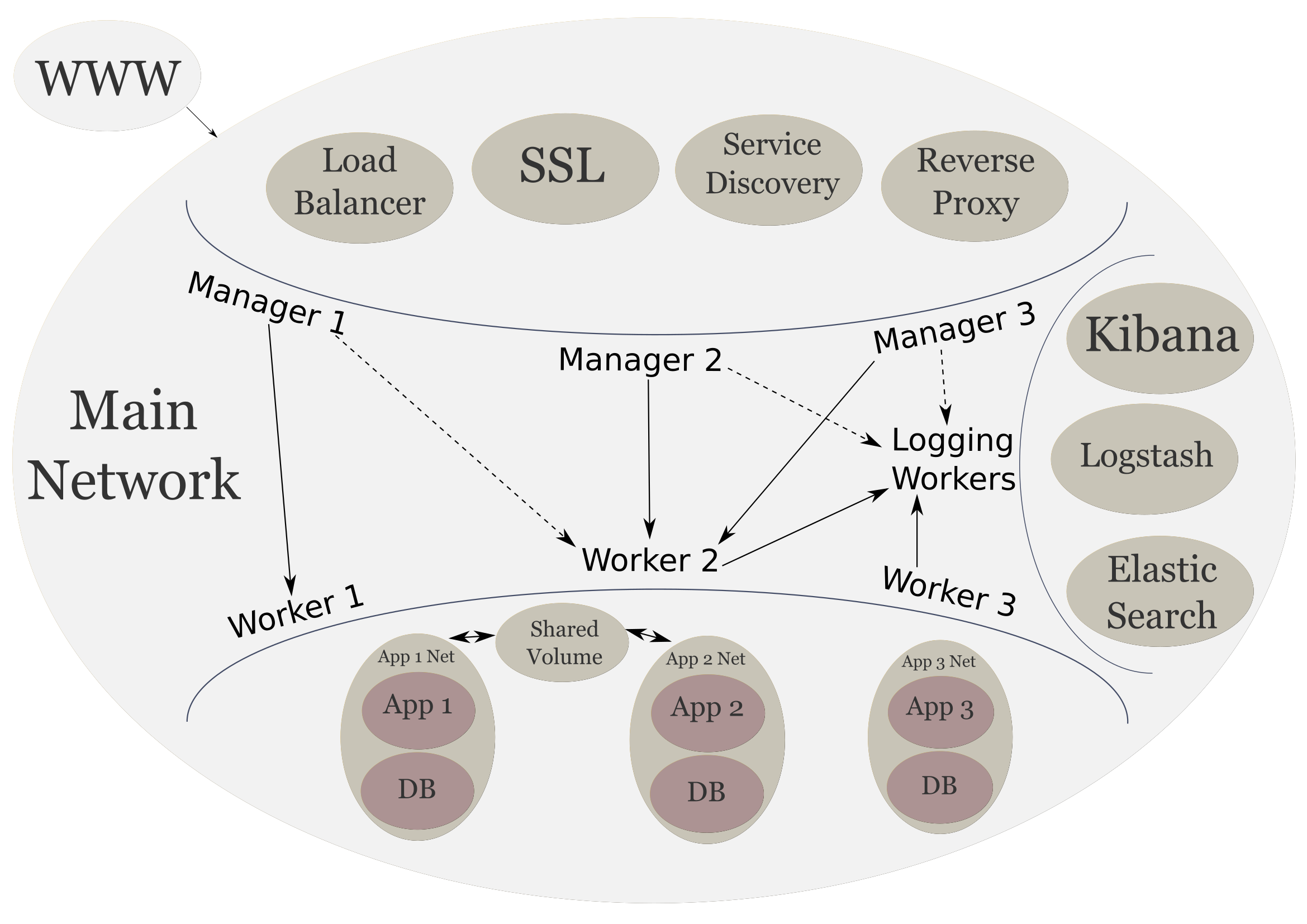

In the previous post, we provisioned our swarm cluster with three managers, three workers, and one logging worker. Now we will be creating the frontend services that will face the internet.

As before the following is the high level diagram of the our intended infrastructure.

- Parts
- Technologies
  - docker-compose utility
  - Docker Flow: Swarm Listener
  - Docker Flow: Proxy
  - Docker Flow: Let's Encrypt
- Frontend Services
  - Swarm Listener
  - Proxy
  - Let's Encrypt
  - Docker Compose Config
- Up Next

Parts

- Scalable System Architecture with Docker, Docker Flow, and Elastic Stack: System Provisioning

- Scalable System Architecture with Docker, Docker Flow, and Elastic Stack: Frontend Services (current)

- Scalable System Architecture with Docker, Docker Flow, and Elastic Stack: Logging Stack

- Scalable System Architecture with Docker, Docker Flow, and Elastic Stack: Backend Services

- Scalable System Architecture with Docker, Docker Flow, and Elastic Stack: Limitations and Final Thoughts

Technologies

As before, we can install Docker for our platform of choice. We will use the docker and docker-machine utilities to create our services.

We will use Docker Flow: Swarm Listener, Proxy, and Let's Encrypt for our frontend services. These will provide our service discovery, reverse proxy and load balancing, and SSL services respectively.

Docker Flow: Swarm Listener

Docker Flow: Swarm Listener is a companion to Proxy that updates the proxy's configuration dynamically as services are added to or removed from the cluster. It watches the Docker socket for service events and, for services labeled com.df.notify=true, sends a request to the proxy's reconfigure (or remove) URL. For example, if both Proxy and Swarm Listener are running and we add our new website to the cluster, Swarm Listener automatically updates the Proxy configuration so that the website becomes reachable.
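To make the label mechanism concrete, here is a minimal sketch of the kind of URL Swarm Listener calls. It assumes the listener forwards the service name plus every com.df.* label (with the prefix stripped) as query parameters on the notify URL. The helper df_notify_url is hypothetical, does not URL-encode values, and is not the listener's actual code.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Docker Flow): builds the kind of URL that
# Swarm Listener is assumed to call when a labeled service is created.
# Usage: df_notify_url BASE_URL SERVICE_NAME [LABEL=VALUE ...]
df_notify_url() {
  local base_url=$1 service_name=$2
  shift 2
  local query="serviceName=${service_name}"
  local label key value
  for label in "$@"; do
    # Only com.df.* labels are forwarded; everything else is ignored.
    [[ $label == com.df.* ]] || continue
    key=${label%%=*}     # text before the first '='
    value=${label#*=}    # text after the first '='
    query+="&${key#com.df.}=${value}"  # drop the com.df. prefix
  done
  printf '%s?%s\n' "$base_url" "$query"
}
```

For a hypothetical website service labeled com.df.servicePath=/ and com.df.port=80, calling `df_notify_url http://proxy:8080/v1/docker-flow-proxy/reconfigure website com.df.servicePath=/ com.df.port=80` prints `http://proxy:8080/v1/docker-flow-proxy/reconfigure?serviceName=website&servicePath=/&port=80`.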

Docker Flow: Proxy

Docker Flow: Proxy is the reverse proxy that we will use to route and load balance traffic within our cluster. Under the hood, this Docker image uses HAProxy for load balancing. Another benefit of this proxy is its built-in support for swarm mode, so no special configuration is necessary.
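The idea behind path-based routing can be illustrated with a toy model: each service registers a path prefix (its servicePath) and a backend, and a request goes to the backend whose prefix matches. The sketch below assumes longest-prefix-wins purely for illustration; it is not how HAProxy or Docker Flow: Proxy actually order their rules, and route_request and the website backend are hypothetical names.

```shell
#!/usr/bin/env bash
# Toy model of path-prefix routing. Illustration only: this is NOT how
# HAProxy or Docker Flow: Proxy actually evaluate their rules.
# Each route is "SERVICE_PATH|BACKEND"; the longest matching prefix wins.
# Usage: route_request REQUEST_PATH ROUTE [ROUTE ...]
route_request() {
  local path=$1
  shift
  local best="" best_len=0 route prefix backend
  for route in "$@"; do
    prefix=${route%%|*}   # text before the first '|'
    backend=${route#*|}   # text after the first '|'
    # Match if the request path starts with this prefix and it is the
    # longest match seen so far.
    if [[ $path == "$prefix"* ]] && (( ${#prefix} > best_len )); then
      best=$backend
      best_len=${#prefix}
    fi
  done
  [[ -n $best ]] || return 1   # no route matched
  printf '%s\n' "$best"
}
```

With the routes `'/|website:80'` and `'/.well-known/acme-challenge|letsencrypt-companion:80'`, a request for /.well-known/acme-challenge/token lands on the companion while every other path falls through to the website.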

Docker Flow: Let's Encrypt

Docker Flow: Let's Encrypt is another companion project to Proxy that uses the Let's Encrypt certificate authority to obtain free SSL certificates for HTTPS. The catch is that these certificates are only valid for 90 days. This is intentional: short lifetimes limit the damage from leaked keys or mis-issued certificates and encourage automated renewal. The companion works around this limitation by setting up an auto-renewal cron job as soon as it starts. The certificates themselves are only renewed once they are 60 days or older.
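The renewal rule above is simple date arithmetic. Here is a minimal sketch of it, assuming timestamps in Unix epoch seconds. The function names are hypothetical and this is not the companion's own code, which presumably leaves the decision to certbot (certbot's default is to renew when fewer than 30 days remain, which for a 90-day certificate means at 60 days of age).

```shell
#!/usr/bin/env bash
# Figures from the text: certificates are valid for 90 days and are renewed
# once they are 60 days old. Timestamps are Unix epoch seconds.
CERT_LIFETIME_DAYS=90
RENEW_AFTER_DAYS=60
SECONDS_PER_DAY=86400

# cert_age_days ISSUED [NOW]: whole days elapsed since the certificate was issued.
cert_age_days() {
  local issued=$1 now=${2:-$(date +%s)}
  echo $(( (now - issued) / SECONDS_PER_DAY ))
}

# days_until_expiry ISSUED [NOW]: days of validity left, based on whole days of age.
days_until_expiry() {
  echo $(( CERT_LIFETIME_DAYS - $(cert_age_days "$@") ))
}

# cert_needs_renewal ISSUED [NOW]: exit status 0 once the certificate is
# at least RENEW_AFTER_DAYS old, non-zero before that.
cert_needs_renewal() {
  (( $(cert_age_days "$@") >= RENEW_AFTER_DAYS ))
}
```

For a certificate issued at epoch second 0, `cert_needs_renewal 0 $((60 * 86400))` succeeds, and at that point `days_until_expiry` reports 30 days left.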

Frontend Services

Now we will start with creating the frontend services. These services can be created via individual docker commands or all together using a docker-compose.yml file. We will be going over both methods. First we will have a look at the docker commands to learn the details of what is going on with the services. Then we will create a single YAML file to deploy our entire stack with one command.

Swarm Listener

First we have to start the Swarm Listener which will provide us with service discovery features. We can start the listener with the following command:

```shell
docker service create --name swarm-listener \
  --network main \
  --mount "type=bind,source=/var/run/docker.sock,target=/var/run/docker.sock" \
  -e DF_NOTIF_CREATE_SERVICE_URL=http://proxy:8080/v1/docker-flow-proxy/reconfigure \
  -e DF_NOTIF_REMOVE_SERVICE_URL=http://proxy:8080/v1/docker-flow-proxy/remove \
  --constraint 'node.role == manager' \
  vfarcic/docker-flow-swarm-listener
```

The above command creates a service named swarm-listener attached to the main network that we created in the previous post. We bind-mount the Docker socket (/var/run/docker.sock) so the listener can watch for service events, and we set the DF_NOTIF_CREATE_SERVICE_URL and DF_NOTIF_REMOVE_SERVICE_URL environment variables to the proxy's reconfigure and remove URLs. Lastly, the node.role == manager constraint restricts the service to manager nodes, since only managers can report swarm service events.

Proxy

Next we will start the reverse proxy which will also load balance the incoming requests. We can start the proxy with the following command:

```shell
docker service create --name proxy \
  -p 80:80 \
  -p 443:443 \
  -p 8080:8080 \
  --network main \
  --constraint 'node.role == manager' \
  -e MODE=swarm \
  -e LISTENER_ADDRESS=swarm-listener \
  vfarcic/docker-flow-proxy
```

Here we name our service proxy. We publish ports 80 and 443 for web access to the proxy. We also publish port 8080, which exposes the proxy's reconfiguration API; the swarm-listener itself reaches that API over the main network at http://proxy:8080, so the published port is mainly useful for reconfiguring the proxy by hand and is best kept off the public internet. As before, we attach the service to the main network and restrict it to manager nodes with the node.role == manager constraint. Lastly, the MODE and LISTENER_ADDRESS environment variables tell the proxy that it is running in a swarm and which service is the listener.
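Because port 8080 is published, the proxy's reconfiguration API can also be called by hand from outside the cluster, which is handy for debugging. The sketch below wraps that call. reconfigure_proxy and the website service are hypothetical, and it assumes the reconfigure endpoint accepts serviceName, servicePath, and port as query parameters, as the Docker Flow: Proxy documentation describes.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the proxy's reconfigure endpoint.
# Usage: reconfigure_proxy HOST SERVICE_NAME SERVICE_PATH PORT
reconfigure_proxy() {
  local host=$1 service_name=$2 service_path=$3 port=$4
  # -f: fail on HTTP errors; -sS: no progress bar, but still show errors.
  curl -fsS "http://${host}:8080/v1/docker-flow-proxy/reconfigure?serviceName=${service_name}&servicePath=${service_path}&port=${port}"
}
```

For example, `reconfigure_proxy "$(docker-machine ip manager-1)" website / 80`. Thanks to the swarm routing mesh, the published port should answer on any node's IP, not just the one running the proxy.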

Let's Encrypt

Finally let's add SSL support to our system. We will be using the Docker Flow: Let's Encrypt companion to achieve this.

There are a few caveats to using this companion: there must be only one instance of it in the swarm; it must always run on the same node (you can move it to a different node, but it must then stay on that node), because its certificates are stored in a folder on that node; and that folder must be created on the node before the service starts.

So to work with this companion, we first have to pick the node we want to run it on. We can list the nodes with the following command:

```shell
docker node ls
```

We pick a machine from the resulting list and copy its id. Let's go with manager-1.
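Copying the ID by hand is error-prone, so here is a small sketch that pulls it out of the docker node ls table by hostname. It assumes the default table layout, where the node you are connected to has a * marker right after its ID; node_id_for is a hypothetical helper. Alternatively, `docker node inspect --format '{{ .ID }}' manager-1` prints the ID directly.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `docker node ls` output on stdin and print the ID
# of the node whose HOSTNAME matches $1. Exits non-zero if it is not found.
node_id_for() {
  awk -v host="$1" '
    NR > 1 {
      # The current node has a * right after its ID, shifting HOSTNAME to $3.
      name = ($2 == "*") ? $3 : $2
      if (name == host) { print $1; found = 1; exit }
    }
    END { exit !found }
  '
}
```

Run against a manager (for example after `eval $(docker-machine env manager-1)`), `NODE_ID=$(docker node ls | node_id_for manager-1)` captures the ID for use in the `node.id == ...` constraint.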

Second we have to create the /etc/letsencrypt folder on the machine we want the companion to run on.

```shell
# /etc is owned by root, so use sudo when the machine's SSH user is not root
docker-machine ssh manager-1 sudo mkdir -p /etc/letsencrypt
```

Now we can start the service with a single instance.

```shell
docker service create --name letsencrypt-companion \
  --label com.df.notify=true \
  --label com.df.distribute=true \
  --label com.df.servicePath=/.well-known/acme-challenge \
  --label com.df.port=80 \
  -e DOMAIN_1="('customdomain.com' 'www.customdomain.com')" \
  -e DOMAIN_COUNT=1 \
  -e CERTBOT_EMAIL="<your-email>" \
  -e PROXY_ADDRESS="proxy" \
  -e CERTBOT_CRON_RENEW="('0 3 * * *' '0 15 * * *')" \
  --network main \
  --mount type=bind,source=/etc/letsencrypt,destination=/etc/letsencrypt \
  --constraint 'node.id == <id>' \
  --replicas 1 \
  hamburml/docker-flow-letsencrypt:latest
```

Here the labels tell the swarm-listener to notify the proxy about this service (com.df.notify), to send the configuration to every proxy replica (com.df.distribute), and to route requests under /.well-known/acme-challenge to the companion on port 80 (com.df.servicePath and com.df.port); this is the path Let's Encrypt uses to validate that we control the domain. The environment variables tell the container which domains we want to secure, how many domain groups (DOMAIN_n variables) we are securing, the email address to use for Let's Encrypt, the service name of the reverse proxy, and when to attempt renewal, in cron syntax (here at 03:00 and 15:00 every day). As before, we use the main network and bind-mount the directory we created earlier. The node.id constraint makes sure the service only starts on that node, and --replicas 1 makes sure only one instance of the companion runs.
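DOMAIN_1 and CERTBOT_CRON_RENEW are written as bash array literals, which is why they carry the unusual `('a' 'b')` quoting. The sketch below shows how such a string can be turned back into a list, assuming the container's scripts evaluate it as a bash array. The helper names are hypothetical; the -d flag is certbot's standard way of passing each domain.

```shell
#!/usr/bin/env bash
# Hypothetical helpers, assuming values like "('a.com' 'www.a.com')" are
# meant to be evaluated as bash arrays. eval runs arbitrary code, so only
# use this on trusted configuration.

# array_literal_items LITERAL: print each element on its own line.
array_literal_items() {
  local -a items=()
  eval "items=$1"
  [[ ${#items[@]} -eq 0 ]] || printf '%s\n' "${items[@]}"
}

# certbot_domain_args LITERAL: turn a domain list into certbot -d flags.
certbot_domain_args() {
  local -a args=()
  local domain
  while IFS= read -r domain; do
    args+=(-d "$domain")
  done < <(array_literal_items "$1")
  printf '%s\n' "${args[*]}"
}
```

In the docker service create command above, the shell strips the outer double quotes, so the container receives exactly `('customdomain.com' 'www.customdomain.com')`, which these helpers turn into `-d customdomain.com -d www.customdomain.com`.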

- Note: The domains you specify must be registered domains that point to the swarm. Local domains will not work, because Let's Encrypt must reach the domain over the internet to validate it. For local testing we can use plain HTTP instead.

Docker Compose Config

If we don't want to start each service individually, we can deploy an entire stack. Since Docker 1.13, docker stack deploy can read docker-compose.yml files (compose file format version 3) to deploy whole stacks of services at once. To use this feature, we copy the following to docker-compose.yml.

```yaml
version: "3"

services:

  swarm-listener:
    image: vfarcic/docker-flow-swarm-listener
    networks:
      - main
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
    environment:
      - DF_NOTIF_CREATE_SERVICE_URL=http://proxy:8080/v1/docker-flow-proxy/reconfigure
      - DF_NOTIF_REMOVE_SERVICE_URL=http://proxy:8080/v1/docker-flow-proxy/remove
    deploy:
      placement:
        constraints:
          - node.role == manager

  proxy:
    image: vfarcic/docker-flow-proxy
    ports:
      - "80:80"
      - "443:443"
      - "8080:8080"
    networks:
      - main
    environment:
      - LISTENER_ADDRESS=swarm-listener
      - MODE=swarm
    deploy:
      replicas: 2
      placement:
        constraints:
          - node.role == manager

  letsencrypt-companion:
    image: hamburml/docker-flow-letsencrypt
    # depends_on is ignored by docker stack deploy; kept for docker-compose users
    depends_on:
      - swarm-listener
      - proxy
    # In list form, quotes after "=" become part of the value, so quote the
    # whole item instead of the value
    environment:
      - "DOMAIN_1=('customdomain.com' 'www.customdomain.com')"
      - DOMAIN_COUNT=1
      - CERTBOT_EMAIL=<your-email>
      - PROXY_ADDRESS=proxy
      - "CERTBOT_CRON_RENEW=('0 3 * * *' '0 15 * * *')"
    networks:
      - main
    volumes:
      - letsencrypt:/etc/letsencrypt
    deploy:
      # Service labels (read by swarm-listener) belong under deploy;
      # top-level labels would only be set on the containers
      labels:
        - "com.df.notify=true"
        - "com.df.distribute=true"
        - "com.df.servicePath=/.well-known/acme-challenge"
        - "com.df.port=80"
      replicas: 1
      placement:
        constraints:
          - node.id == <id>

volumes:
  letsencrypt:

networks:
  main:
    external: true
```

This compose file defines the same environment variables, labels, constraints, and volumes as the commands above, except that it runs two proxy replicas and uses a named volume in place of the /etc/letsencrypt bind mount. The named volume is more convenient because we don't have to create the directory on the host. It still lives on a single node, which is one more reason to keep the node.id constraint.

To deploy our stack we need to execute the following command:

```shell
docker stack deploy -c docker-compose.yml frontend
```

To verify that everything is running we can use:

```shell
docker service ls
```

```
### OUTPUT ###
ID            NAME                            MODE        REPLICAS  IMAGE
5m1ggbb5ic6j  frontend_swarm-listener         replicated  1/1       vfarcic/docker-flow-swarm-listener:latest
smj7l18621hv  frontend_proxy                  replicated  2/2       vfarcic/docker-flow-proxy:latest
yt1d2j2oq1or  frontend_letsencrypt-companion  replicated  1/1       hamburml/docker-flow-letsencrypt:latest
```

We can see that all replicas are running. To check the detailed status of a particular service:

```shell
docker service ps frontend_letsencrypt-companion
```

```
### OUTPUT ###
ID            NAME                              IMAGE                                    NODE       DESIRED STATE  CURRENT STATE           ERROR  PORTS
zn9bi36yb5p1  frontend_letsencrypt-companion.1  hamburml/docker-flow-letsencrypt:latest  manager-1  Running        Running 24 minutes ago
```
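Rather than eyeballing the REPLICAS column, we can flag services whose running count lags the desired count, which is also how a task stuck in Pending shows up. This is a small sketch that scans the default docker service ls table for the first running/desired field on each row; unhealthy_services is a hypothetical name, and the parsing assumes the table layout shown above (NAME in the second column).

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `docker service ls` output on stdin and print the
# NAME of every service running fewer replicas than desired.
# Exits 1 if any such service is found, 0 otherwise.
unhealthy_services() {
  awk '
    NR > 1 {
      for (i = 1; i <= NF; i++) {
        # The first field shaped like running/desired is the REPLICAS column.
        if ($i ~ /^[0-9]+\/[0-9]+$/) {
          split($i, r, "/")
          if (r[1] + 0 < r[2] + 0) { print $2; bad = 1 }
          break
        }
      }
    }
    END { exit bad }
  '
}
```

For example, `docker service ls | unhealthy_services || echo "some services are not fully running"` lists the lagging services and prints the warning only when at least one is found.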

If a service's status is ever stuck at Pending, we must remove that service and make sure its configuration is correct before deploying it again; a common cause is a placement constraint that no node satisfies, such as a mistyped node ID. We can remove a service by using:

```shell
docker service rm frontend_letsencrypt-companion
```

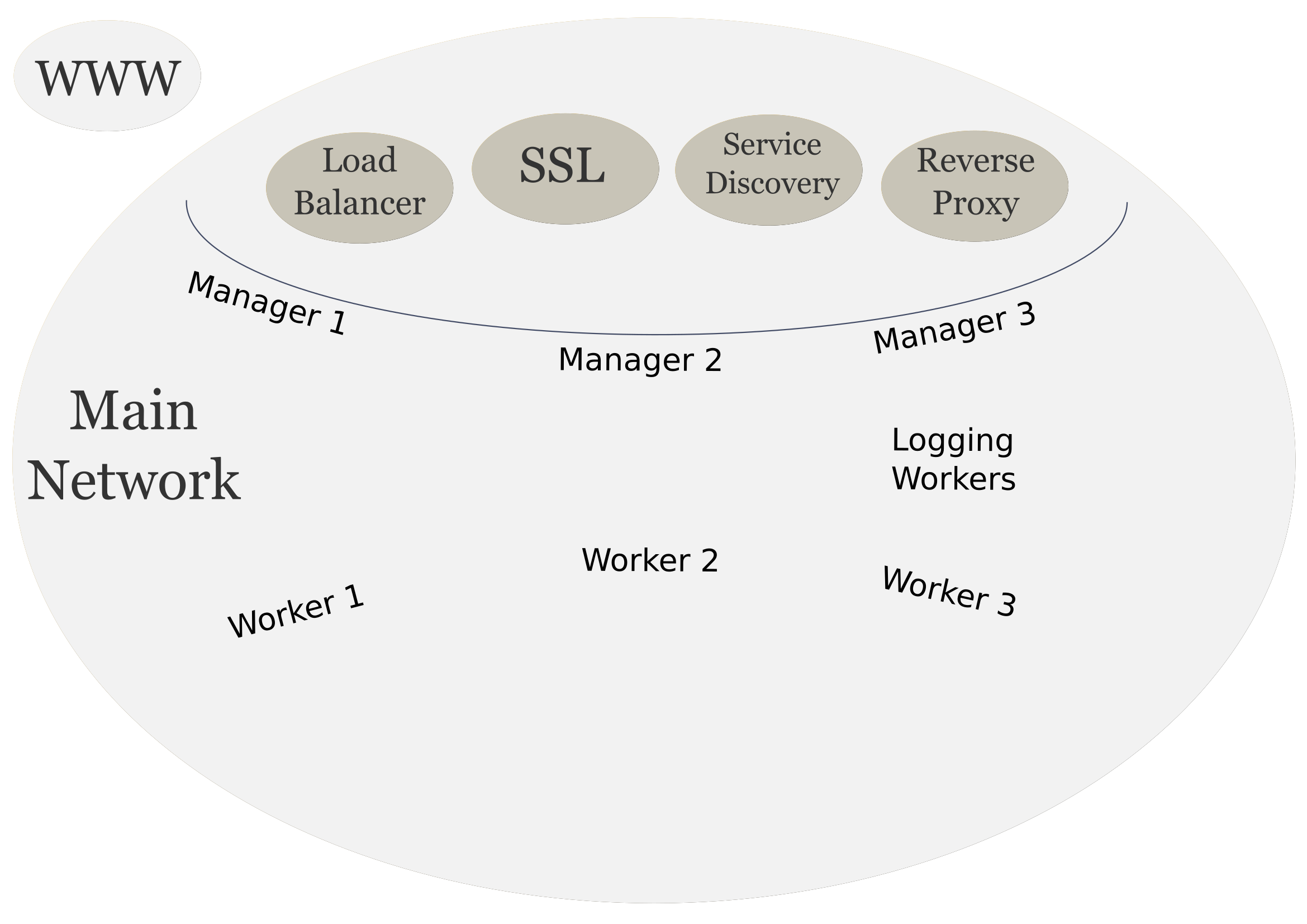

The following is what our looks like now:

Up Next

In the next section, we will look at how we can deploy our custom applications as backend services on our infrastructure.